Notes — full feed

Basic facts about GPUs

I’ve been trying to get a better sense of how GPUs work. I’ve read a lot online, but the following posts were particularly helpful:

- Making Deep Learning Go Brrrr From First Principles

- What Shapes Do Matrix Multiplications Like?

- How to Optimize a CUDA Matmul Kernel for cuBLAS-like Performance: a Worklog

This post collects various facts I learned from these resources.

Acknowledgements: Thanks to Alex McKinney for comments on independent thread scheduling.

Table of Contents

- Compute and memory hierarchy

- Two Performance Regimes: Memory-Bound and Compute-Bound

- The Third Regime: Overhead

- Two basic strategies for increasing performance: Fusion and Tiling

- Additional Performance Considerations

Compute and memory hierarchy

A GPU’s design creates an imbalance since it can compute much faster than it can access its main memory. An NVIDIA A100 GPU, for example, can perform 19.5 trillion 32-bit floating-point operations per second (TFLOPS), but its memory bandwidth is only about 1.5 terabytes per second (TB/s). In the time it takes to read a single 4-byte number, the GPU could have performed over 50 calculations.

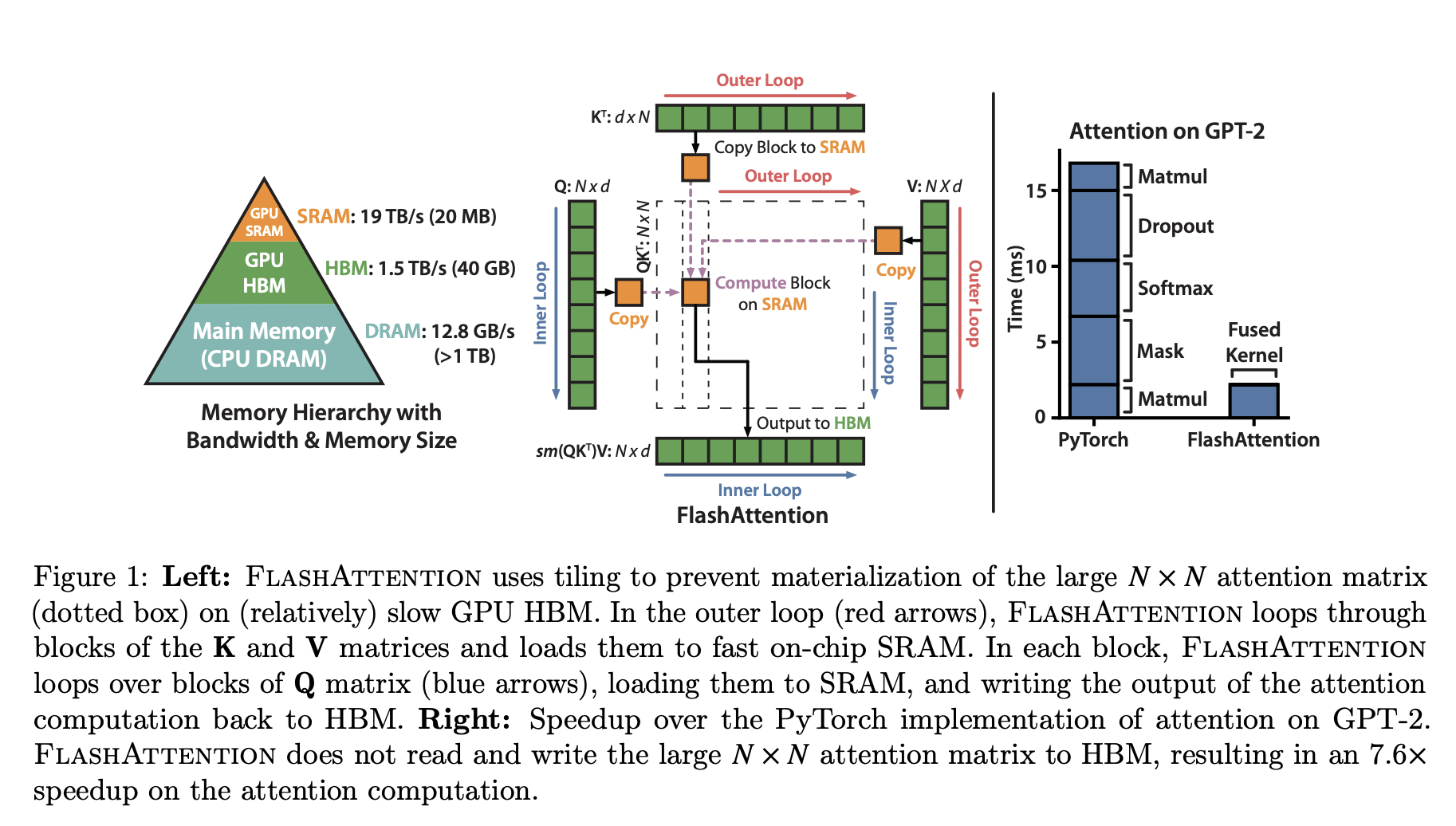

Below is a diagram of the compute and memory hierarchy for an NVIDIA A100 GPU. The numbers I quote for flops/s and TB/s are exclusive to A100s.

+---------------------------------------------------------------------------------+

| Global Memory (VRAM) |

| (~40 GB, ~1.5 TB/s on A100) |

+----------------------------------------+----------------------------------------+

| (Slow off-chip bus)

+----------------------------------------v----------------------------------------+

| Streaming Multiprocessor (SM) |

| (1 of 108 SMs on an A100, each ~(19.5/108) TFLOPS) |

| (2048 threads, 64 warps, 32 blocks) |

| +-----------------------------------------------------------------------------+ |

| | Shared Memory (SRAM) / L1 Cache |

| | (~192 KB on-chip workbench, 19.5 TB/s) |

| +-----------------------------------------------------------------------------+ |

| | Register File (~256 KB, ? TB/s) |

| +-----------------------------------------------------------------------------+ |

| | | |

| | //-- A "Block" of threads runs on one SM --// | |

| | +--------------------------+ +------------------------+ | |

| | | Warp 0 (32 thr) | | Warp 1 (32 thr) | ... (up to 32 warps)| |

| | | +----------------------+ | +----------------------+ | | |

| | | | Thread 0 Registers | | | Thread 32 Registers | | | |

| | | | [reg0: float] | | | [reg0: float] | | | |

| | | | [reg1: float] ... | | | [reg1: float] ... | | | |

| | | +----------------------+ | +----------------------+ | | |

| | +--------------------------+ +------------------------+ | |

| | | |

+---------------------------------------------------------------------------------+

This diagram shows the performance hierarchy.1 Global Memory (VRAM) is the large, slow, off-chip memory pool where all data initially lives. A Streaming Multiprocessor (SM) is the GPU’s unit of computation. To work, it must fetch data over the slow bus. To mitigate this, each SM has fast, on-chip Shared Memory (SRAM) with a bandwidth of 19.5 TB/s.2 Programmers use this as a manually-managed cache.

A thread is the smallest unit of execution. Each thread has a private set of Registers to hold values for immediate computation, with access speeds over ?? TB/s.3 The hardware groups threads into Warps of 32. This post analyzes performance using the simplified model of lockstep execution, where all 32 threads in a warp execute the same instruction at the same time.4 On an A100, an SM has an upper limit of 64 warps. A programmer groups threads into a Block, a grid of threads that is guaranteed to run on a single SM. A block can be one, two, or three-dimensional. For simplicity, this post will focus on square two-dimensional blocks of BLOCK_DIM x BLOCK_DIM threads, where the total number of threads cannot exceed the hardware limit of 1024. All threads in a block share access to the same on-chip Shared Memory.

The Two Performance Regimes

We analyze the performance of a kernel, which is a function launched by the host (CPU) to be executed in parallel by many GPU threads. A kernel’s performance is limited by either its memory bandwidth or its compute throughput. These two limits define the performance regimes.

An operation is memory-bound if its runtime is dictated by the speed of transferring data from Global Memory to the SM. For an operation like element-wise addition y = x + 1, the SM performs a trivial number of FLOPs for each element it reads. The SM spends most of its time idle, waiting for data.

An operation is compute-bound if its runtime is dictated by the SM’s arithmetic speed. A large matrix multiplication is the canonical example. Once data is loaded into the SM, a massive number of computations are performed. The memory bus is idle while the SM is busy.

Arithmetic Intensity (AI) is the formal metric that determines the regime. It is the ratio of work to memory traffic.

Arithmetic Intensity = Total FLOPs / Total Bytes Accessed

For the Roofline model, Total Bytes Accessed specifically counts the data transferred between Global Memory (HBM) and the on-chip SM. This is because the model evaluates a kernel’s performance against the primary bottleneck: the slow off-chip memory bus. On-chip traffic, such as from Shared Memory to registers, is not included in this calculation.

The Roofline Model plots a kernel’s achievable performance (in FLOPs per second) against its AI. The two “roofs” are the hard physical limits of the GPU.

^ Performance (TFLOPS)

|

| Memory-Bound Region ¦ Compute-Bound Region

| ¦

| /¦---------------------- <-- Peak Compute (~19.5 TFLOPS)

| / ¦

| / ¦

| Peak Global /<--¦------ Inefficient Compute Roof (e.g., using scalar ops, transcendental functions)

| Mem BW (~1.5 / ¦

| TB/s) / ¦

| / ¦

+---------------------¦---------------------------> Arithmetic Intensity (FLOPs/Byte)

^

¦

Hardware Ridge Point (~13)

The performance of a kernel is determined as follows:

- When memory-bound, the SMs are stalled waiting for data. The runtime is the time it takes to move that data:

Runtime = Bytes_Accessed / Memory_Bandwidth. The kernel’s performance is thereforePerformance = Total_FLOPs / Runtime = AI * Memory_Bandwidth. On the log-log plot, this is the diagonal line. - When compute-bound, the SMs are fully utilized. The performance is limited by their peak arithmetic throughput:

Performance = Peak_Compute_FLOPs. This is the horizontal line.

A kernel’s actual performance is the minimum of these two values. The ridge point is the AI where the two performance ceilings intersect. For the A100, this is 19.5 TFLOPS / 1.5 TB/s ≈ 13 FLOPs/Byte. A kernel must exceed this AI to become compute-bound. A kernel with AI lower than 13 operates in the memory-bound region; a kernel with AI higher than 13 operates in the compute-bound region. The goal of optimization is to increase AI to move the kernel’s operating point to the right, pushing its performance up until it hits the compute roof.

The “Peak Compute” roof of 19.5 TFLOPS is an ideal, achievable only with highly optimized instructions like Tensor Core matrix multiplications and high enough power limits. An operation can be compute-bound but still perform far below this peak. For example, a kernel with high AI that is dominated by scalar arithmetic or complex transcendental functions (sin, exp) will be limited by the throughput of those specific, slower instructions. This creates a lower effective “roof” for that kernel, as shown in the diagram. Increasing AI is necessary, but not sufficient; the FLOPs must also be efficient.

The primary strategy to increase AI is to maximize the reuse of data once it has been loaded into the SM’s fast on-chip memory. The following is a simplified model where a thread reads data from Global Memory directly into its private registers. This analysis calculates the minimum required data transfer; actual memory traffic depends on access patterns, which we will discuss later.

Consider computing C = A@B, where all matrices are N x N and use 4-byte floats.

Strategy 1: One thread computes one element C[i,j]

- FLOPs: To compute

C[i,j], the thread performs N multiply-add operations. This is2*NFLOPs. - Bytes Accessed: The thread must read row

iof A (N floats) and columnjof B (N floats). This is a total of2*Nfloats, or8*Nbytes. - Arithmetic Intensity:

(2*N FLOPs) / (8*N Bytes) = 0.25 FLOPs/Byte.

This AI is low. The kernel will be memory-bound.

Strategy 2: One thread computes a 2x2 tile of C

To compute a 2x2 tile (C[i,j], C[i,j+1], C[i+1,j], C[i+1,j+1]), the thread must perform the computation for all four elements.

- FLOPs:

4 elements * 2*N FLOPs/element = 8*NFLOPs. - Bytes Accessed: The thread must read two rows from A (

A[i,:],A[i+1,:]) and two columns from B (B[:,j],B[:,j+1]). This is2*N + 2*N = 4*Nfloats, or16*Nbytes. - Arithmetic Intensity:

(8*N FLOPs) / (16*N Bytes) = 0.5 FLOPs/Byte.

These AI values are far below the A100’s ridge point of ~13 FLOPs/Byte. This simple register-only model is insufficient to make matrix multiplication compute-bound.5 The key to achieving high AI is for threads within a block to cooperate by loading a much larger tile of A and B into the shared, on-chip SRAM. By working together on this shared data, a block of 1024 threads can achieve an AI greater than 13. We will detail the mechanics of this in the section on Shared Memory.

The Third Regime: Overhead

Performance can also be limited by host-side overhead. This is time the CPU (the host) spends preparing work for the GPU, for example, in the Python interpreter or a framework’s dispatch system.

An application is overhead-bound if its GPU kernels are too small or numerous. The GPU executes each small task quickly and then waits, idle, for the CPU to issue the next command. The runtime is dominated by the CPU’s inability to feed the GPU fast enough.

Modern frameworks use asynchronous execution to mitigate this. The host can queue a stream of commands for the GPU without waiting for each one to complete. If the individual GPU operations are sufficiently large, the host can “run ahead,” and the overhead of launching one kernel is hidden by the execution of the previous one.

For the remainder of this post, we assume our kernels are large enough that overhead is not the primary limiter, and focus instead on memory and compute.6

Two basic strategies for increasing performance: Fusion and Tiling

With the kernel large enough to make launch overhead negligible, performance is governed by the two physical limits of the GPU: memory bandwidth and compute throughput. Increasing the performance of a kernel, therefore, means pushing its operating point on the Roofline model up and to the right. There are two basic strategies for achieving this.

- For a sequence of individually memory-bound operations, the strategy is to fuse them into a single kernel to eliminate intermediate memory traffic.

- For a single, complex operation with high potential arithmetic intensity (like matrix multiplication), the strategy is to use tiling to maximize data reuse within the SM’s fast memory.

We will address each strategy in turn.

Operator Fusion

Chains of simple operations like y = relu(x + 1) are common. Each operation (add, relu) has a very low arithmetic intensity and is memory-bound. Executing them as separate, sequential GPU kernels is inefficient. The primary strategy to optimize these sequences is operator fusion.

The problem is the intermediate memory traffic. Consider the unfused execution of y = relu(x + 1):

- Kernel 1 (

add): Reads the entire tensorxfrom global memory. Computestmp = x + 1. Writes the entire intermediate tensortmpback to global memory. - Kernel 2 (

relu): Reads the entire tensortmpfrom global memory. Computesy = relu(tmp). Writes the final tensoryback to global memory.

This approach is wasteful. It involves two separate kernel launch overheads and forces a round-trip to slow global memory for the intermediate tmp tensor.

Fusion combines these steps into a single, more efficient GPU kernel. A JIT compiler like Triton or torch.compile’s Inductor backend can perform this transformation automatically.

In the fused kernel:

- A single thread reads one element of

xfrom global memory into its private registers. - It performs all computations, i.e.,

tmp = x + 1, theny = relu(tmp), entirely within those fast registers. - It writes only the final result

yback to global memory.

# Unfused (Conceptual)

def unfused_add_relu(x):

tmp = torch.add(x, 1) # Reads x from HBM, writes tmp to HBM

y = torch.relu(tmp) # Reads tmp from HBM, writes y to HBM

return y

# Fused (Conceptual)

@torch.compile

def fused_add_relu(x):

# The compiler fuses these into one kernel.

# The intermediate result of x+1 never touches HBM.

return torch.relu(x + 1)

The intermediate tensor tmp becomes ephemeral, never materializing in global memory. This cuts the memory traffic in half (one read of x, one write of y) and eliminates the launch overhead of the second kernel.

Tiling: Strategy for Compute-Bound Kernels

Our register-only model for C=A@B yielded an arithmetic intensity of 0.25 FLOPs/Byte, far below the A100’s ridge point of ~13. This is because a single thread reads 2*N values to perform 2*N FLOPs; the data is used once and then discarded. To increase data reuse and become compute-bound, threads within a block must cooperate to load large tiles of the input matrices into the SM’s fast, on-chip Shared Memory.

The logic of this cooperation is based on decomposing the matrix product. The calculation for a single element C[i,j] is a sum over the k dimension: C[i,j] = sum_k A[i,k] B[k,j]. This sum can be partitioned into a sum of partial sums over tiles. For square tiles, the inner k dimension is broken into tiles of size BLOCK_DIM, matching the outer dimensions. The formula becomes:`

The tiling algorithm computes one term from the outer sum (one partial product) per iteration. A block of threads computes one output C_tile by iterating through the k dimension, loading tiles of A and B, computing their product on-chip, and accumulating the result. This is achieved with a three-phase pattern: Load, Synchronize, and Compute.

# Conceptual algorithm for one thread block computing one output tile, C_tile.

# C_tile corresponds to, e.g., C[block_row_start:end, block_col_start:end].

# Each thread in the block holds a piece of C_tile in its registers. Initialize to zero.

thread_private_C_accumulator = zeros(...)

# Loop over tiles of A and B along the k-dimension.

# Each iteration computes one partial product from the sum above.

for k_tile_idx in range(NUM_K_TILES):

# Phase 1: Load

# All threads in the block cooperate to load one tile of A and one tile of B

# from slow Global Memory into fast Shared Memory.

A_tile = load_A_tile_from_global_mem(k_tile_idx)

B_tile = load_B_tile_from_global_mem(k_tile_idx)

# Phase 2: Synchronize

# Wait for all threads to finish loading before any thread starts computing.

# This ensures A_tile and B_tile are fully populated.

__syncthreads()

# Phase 3: Compute

# Each thread computes its piece of the on-chip matmul.

# The data in A_tile and B_tile is reused extensively from Shared Memory.

thread_private_C_accumulator += on_chip_matmul_piece(A_tile, B_tile)

# Wait for all threads to finish computing before loading the next tile.

__syncthreads()

# After the loop, write the final accumulated result to Global Memory.

write_C_tile_to_global_mem(thread_private_C_accumulator)

We now examine the mechanics of the three-phase Load, Synchronize, Compute pattern.

The Coalesced Load: HBM to SRAM

The first phase loads tiles of A and B from slow global memory (HBM) into fast on-chip Shared Memory (SRAM). The goal is to perform this transfer with the maximum possible memory bandwidth. This requires coalesced memory access. A memory access is coalesced when all 32 threads in a warp access a single, contiguous 128-byte block of HBM in one transaction.

To achieve this, the kernel maps thread indices to memory addresses. For a BLOCK_DIM x BLOCK_DIM block of threads loading a data tile of the same size, a common mapping is for thread (tx, ty) to be responsible for loading A[global_row + ty, global_k + tx] into A_tile[ty, tx] in Shared Memory. In this example, BLOCK_DIM is 32.

Consider a single warp of threads where ty is fixed and tx ranges from 0 to 31.

- Thread

(0, ty)readsA[global_row + ty, global_k + 0]. - Thread

(1, ty)readsA[global_row + ty, global_k + 1]. - …

- Thread

(31, ty)readsA[global_row + ty, global_k + 31].

Assuming row-major storage, these threads access 32 consecutive 4-byte floats, a contiguous 128-byte segment. This is a perfect coalesced read. The entire 32x32 tile is loaded in 32 such coalesced reads, one for each warp in the block.

Thread Block (32x32) Global Memory (HBM)

(One row of A's tile)

+--------------------+

| Warp 0 (ty=0) | ----> [A_ij, A_i,j+1, ..., A_i,j+31] (128 bytes)

| (tx = 0..31) | (One coalesced memory transaction)

+--------------------+

| Warp 1 (ty=1) | ----> [A_i+1,j, ..., A_i+1,j+31] (128 bytes)

+--------------------+

| ... |

+--------------------+

| Warp 31 (ty=31) | ----> [A_i+31,j, ..., A_i+31,j+31] (128 bytes)

+--------------------+

This load can be made more efficient with vectorized access. The physical memory transaction for a coalesced read fetches the full 128 bytes from HBM regardless. The difference is how the SM requests this data.

With scalar loads, the warp must issue 32 separate 32-bit load instructions. With vectorized loads, it issues only 8 wider 128-bit load instructions. This is more efficient because the SM has a limited number of instruction issue slots per clock cycle. Requesting the data with 8 wide instructions consumes fewer of these hardware resources than requesting it with 32 narrow instructions. This ensures the memory controller is kept busy with a continuous stream of full-width requests, increasing the utilized memory bandwidth by reducing SM-side bottlenecks.

Vectorized access is enabled by casting pointers in device code (e.g., from float* to float4*), promising the compiler that the memory is aligned to the vector size.

The efficiency of these vectorized loads relies on memory alignment. A single float4 instruction loads a 16-byte vector. For a matrix of 4-byte floats, this vector contains 4 elements. The hardware executes this instruction efficiently only if the memory address is a multiple of 16. This means the matrix’s inner dimension K (the number of columns) must be a multiple of 4. If K is not a multiple of 4, the rows become misaligned with the 16-byte memory segments.

Consider a matrix of 4-byte floats and a memory system with 16-byte segments.

- Aligned (K=8, a multiple of 4):

Memory: |<--- 16B --->|<--- 16B --->| [Seg 0 ][Seg 1 ] Row 0: [e0 e1 e2 e3 | e4 e5 e6 e7] (A float4 load for e0-e3 is aligned) Row 1: [e0 e1 e2 e3 | e4 e5 e6 e7] (A float4 load for e0-e3 is aligned) - Unaligned (K=7):

Memory: |<--- 16B --->|<--- 16B --->|<--- 16B --->| [Seg 0 ][Seg 1 ][Seg 2 ] Row 0: [e0 e1 e2 e3 e4 e5 e6] Row 1: [e0 e1 e2 e3 e4 e5 e6] (A float4 load for Row 1's e0-e3 spans Seg 0 and Seg 1)This misalignment forces the hardware to issue more complex, slower load operations, reducing memory bandwidth.7

Important: This row-wise strategy provides coalesced access for matrix A. For matrix B, the required access patterns are in opposition.

- HBM Requirement: To maintain coalescing, the B tile must be read from HBM row-by-row.

- Compute Requirement: The matrix multiplication itself requires access to columns of the B tile.

Loading columns directly from a row-major matrix is an uncoalesced, strided access that serializes HBM transactions. The solution is therefore to load the B tile using coalesced row-reads, but then rearrange the data as it is written into Shared Memory. The structure of this rearrangement is dictated by the physical, banked architecture of Shared Memory.

Synchronization

The __syncthreads() call acts as a barrier. No thread in the block proceeds until all threads have reached this point. This ensures the A_tile and B_tile are fully loaded into Shared Memory before the compute phase begins.8:

The On-Chip Hardware: Banks and Warps

Shared Memory is a physical resource located on the Streaming Multiprocessor (SM). When a thread block is scheduled to run on an SM, it is allocated a portion of that SM’s total Shared Memory for its exclusive use.

The Shared Memory is physically partitioned into 32 independent memory modules of equal size, called banks. These banks can service memory requests in parallel. This number is not arbitrary; it is matched to the warp size. Recall that a warp consists of 32 threads that execute instructions in lockstep, and it is the fundamental unit of memory access. The 32 banks are designed to serve, in parallel, the 32 memory requests from a single warp in one clock cycle, provided those requests target different banks.

Addresses, representing 4-byte words, are interleaved across the banks.

bank 0: [word 0, word 32, word 64, ...]

bank 1: [word 1, word 33, word 65, ...]

...

bank 31: [word 31, word 63, word 95, ...]

The bank for a given word address is determined by bank_id = address % 32.

The Bank Conflict Problem

To achieve the full bandwidth of Shared Memory, the 32 threads of a warp must access words that fall into 32 different banks. A bank conflict occurs when multiple threads access different addresses that map to the same bank. The hardware resolves this by serializing the requests, reducing bandwidth. A broadcast, where all threads read the same address, is a fast, conflict-free operation.

This creates a problem for matrix multiplication. Consider a BLOCK_DIM x BLOCK_DIM tile stored in Shared Memory in a row-major layout, where BLOCK_DIM=32. The address of tile[row, col] is row * 32 + col.

- Row Access (A_tile): A warp accesses

A_tile[fixed_row, t]fort = 0..31. The addresses arefixed_row * 32 + t. The bank for each threadtis(fixed_row * 32 + t) % 32 = t % 32. Sincetis unique for each thread, the threads access 32 unique banks. This is a conflict-free, full-bandwidth access. - Column Access (B_tile): A warp accesses

B_tile[t, fixed_col]fort = 0..31. The addresses aret * 32 + fixed_col. The bank for each threadtis(t * 32 + fixed_col) % 32 = fixed_col % 32. All 32 threads target the same bank. This causes a 32-way bank conflict, serializing the memory access.

The solution is to store the B_tile in a transposed layout within Shared Memory.

# Action for thread (tx, ty) during the load phase

# A is loaded directly, B is loaded and transposed on-the-fly

A_tile[ty, tx] = A_global[global_row + ty, global_k + tx]

B_tile[tx, ty] = B_global[global_k + ty, global_j + tx] # Indices are swapped

This “load-and-transpose” maneuver alters the on-chip computation. The calculation for an element of the partial product is no longer a dot product between a row of A_tile and a column of B_tile. Instead, using the transposed on-chip B_tile, the formula becomes:

In this formulation, a warp of threads computing different j values for a fixed i will access a row from A_tile and a row from the on-chip B_tile. Both are conflict-free access patterns. This single strategy solves both the HBM coalescing requirement and the SRAM bank conflict problem.

Load-and-Transpose Operation (Thread tx, ty)

Reads row-wise from HBM, writes column-wise to SRAM

Global Memory (HBM) Shared Memory (SRAM)

+-------------------------+ +-----------------------+

| B[k_base+ty, j_base+tx] | -----> | B_tile[tx, ty] |

+-------------------------+ +-----------------------+

Result: HBM reads are coalesced, SRAM reads are conflict-free.

E. The On-Chip Compute Phase: Increasing Arithmetic Intensity

With data staged in Shared Memory, the block performs the computation. The goal is to maximize data reuse from this fast on-chip memory. We will analyze two strategies for structuring this on-chip computation.

Strategy 1: One thread computes one output

The simplest approach maps one output element to one thread. A BLOCK_DIM x BLOCK_DIM thread block computes a TILE_DIM x TILE_DIM data tile, where BLOCK_DIM and TILE_DIM are equal. This strategy is conceptually similar to Kernel 3 in Boehm’s post, which introduces Shared Memory caching.9 The hardware limit of 1024 threads per block constrains BLOCK_DIM to be at most 32. Thread (tx, ty) is responsible for a single output element C_partial[ty, tx].

# Action for a single thread (tx, ty) where BLOCK_DIM = TILE_DIM

c_accumulator = 0.0

for k in range(TILE_DIM):

c_accumulator += A_tile[ty, k] * B_tile[tx, k]

The arithmetic intensity for this strategy is TILE_DIM / 4.

- Total FLOPs: The block performs

2 * TILE_DIM^3FLOPs. - Total Bytes Accessed (HBM): The block loads two data tiles, totaling

8 * TILE_DIM^2bytes. - Arithmetic Intensity (AI):

(2 * TILE_DIM^3) / (8 * TILE_DIM^2) = TILE_DIM / 4FLOPs/Byte.

With TILE_DIM limited to 32, the maximum AI is 32 / 4 = 8. This is insufficient to cross the A100’s ridge point of ~13. The kernel remains memory-bound.

Strategy 2: One thread computes multiple outputs

To increase AI, we must increase TILE_DIM without increasing the number of threads. This requires decoupling the data tile size from the thread block size. We assign more work to each thread. This strategy corresponds to the goal of Kernel 5 in Boehm’s post.

A 16x16 thread block (BLOCK_DIM = 16, 256 threads) can compute a 64x64 data tile (TILE_DIM = 64). Each thread now computes a 4x4 sub-tile of the output. This requires TILE_DIM=64 to not exceed Shared Memory capacity.10

# A thread computes a 4x4 output sub-tile

# TILE_DIM = 64, BLOCK_DIM = 16

c_regs = [[0.0] * 4 for _ in range(4)]

a_regs = [0.0] * 4

b_regs = [0.0] * 4

for k in range(TILE_DIM):

# Load a sliver of A_tile and B_tile into registers

for i in range(4): a_regs[i] = A_tile[thread_row*4 + i, k]

for j in range(4): b_regs[j] = B_tile[thread_col*4 + j, k]

# Compute outer product in registers, accumulating into c_regs

for i in range(4):

for j in range(4):

c_regs[i][j] += a_regs[i] * b_regs[j]

The AI calculation remains TILE_DIM / 4. With TILE_DIM = 64, the AI is 64 / 4 = 16 FLOPs/Byte. This exceeds the A100’s ridge point. The kernel is now compute-bound.

A compute-bound kernel’s runtime is limited by the SM’s arithmetic throughput. This does not guarantee high absolute performance. A kernel can be compute-bound but still be slow if its FLOPs are inefficient (e.g., using scalar FP32 math instead of specialized hardware like Tensor Cores11) or if the GPU operates below its peak clock speed due to power limits.

The inner loop in the code above can be further optimized. A thread loads four separate float values from A_tile into a_regs. It can instead issue a single instruction to load a 16-byte float4 vector. This vectorized load from Shared Memory reduces the number of instructions issued for on-chip data movement, improving the efficiency of the compute phase. This corresponds to the on-chip vectorization refinement used in Kernel 6 of Boehm’s post.

A Final Consideration: Tile Quantization

If matrix dimensions are not multiples of the tile size, the kernel launches extra blocks that perform wasted computation.

To cover an M x N matrix with TILE_M x TILE_N tiles, the GPU launches a grid of ceil(M/TILE_M) x ceil(N/TILE_N) thread blocks. Tiling a 65x65 matrix with 32x32 tiles requires a ceil(65/32) x ceil(65/32) = 3x3 grid of blocks. The kernel’s logic is fixed; each block is programmed to perform the arithmetic for a full 32x32 tile.

Columns 0-31 Columns 32-63 Columns 64-95

+-----------------+-----------------+-----------------+

R 0 | | | |

o-31| Block 0,0 | Block 0,1 | Block 0,2 |

w | (Full work) | (Full work) | (Wasted work) |

s | | | |

+-----------------+-----------------+-----------------+

R 32| | | |

o-63| Block 1,0 | Block 1,1 | Block 1,2 |

w | (Full work) | (Full work) | (Wasted work) |

s | | | |

+-----------------+-----------------+-----------------+

R 64| | | |

o-95| Block 2,0 | Block 2,1 | Block 2,2 |

w | (Wasted work) | (Wasted work) | (Wasted work) |

s | | | |

+-----------------+-----------------+-----------------+

According to NVIDIA, “While libraries ensure that invalid memory accesses are not performed by any of the tiles, all tiles will perform the same amount of math.” My understanding of why this happens (I’m happy to be corrected): Boundary blocks perform wasted work because the kernel explicitly pads the data. Threads assigned to load elements from outside the matrix bounds are prevented from doing so by a guard condition. Instead, they write zero to their location in the on-chip Shared Memory tile. The arithmetic loops are not shortened. The kernel’s logic is uniform across the tile. All threads in a warp execute the same multiply-add instructions. A thread whose data corresponds to a padded zero still executes the instruction; it just performs a useless computation, such as C += A * 0. The hardware resources are used, but the work is discarded.

Additional Performance Considerations

We have made our kernel compute-bound. Its performance is now limited by the speed of its on-chip arithmetic. However, the kernel can still be made faster by managing additional aspects of the hardware. The following are three such considerations. There are others, but I’m not quite advanced enough to write about them, yet. See Boehm’s post for others.

Occupancy and Latency Hiding

A warp stalls when it executes a long-latency instruction, such as a read from Global Memory. It cannot execute its next instruction until the data arrives, which can take hundreds of clock cycles. During this time, the SM’s compute units would be idle if the stalled warp were the only work available.

The SM hides this latency by executing other work. It can hold multiple thread blocks concurrently, creating a pool of resident warps. When one warp stalls, the SM’s hardware scheduler instantly switches to a different warp from this pool that is ready to run. This mechanism is called latency hiding.

+-------------------------------------------------------------------+

| Streaming Multiprocessor (SM) |

| |

| [Block A] [Block B] |

| - Warp A1 (Ready) - Warp B1 (Ready) |

| - Warp A2 (Stalled -> waiting on HBM) |

| | | |

| +------------------v------------------+ |

| [ Pool of Ready-to-Run Warps ] |

| [ A1, B1 ] |

| | |

| +-------v-------+ |

| | SM Scheduler | --> [Execute instructions] |

| +---------------+ |

| |

+-------------------------------------------------------------------+

Occupancy is the ratio of active warps on an SM to the maximum number it can support. High occupancy gives the scheduler a larger pool of warps to choose from. This increases the likelihood that it can find a ready warp to execute at any given cycle, keeping the compute units active.

This leads to a trade-off between the resources used per block and the number of blocks that can reside on an SM. The two extremes can be visualized as follows:

+------------------------------------+ +----------------------------------------------+

| SM with High AI, Low Occupancy | | SM with Low AI, High Occupancy |

| | | |

| +--------------------------------+ | | +----------+ +-----------+ +-----------+ |

| | Block 0 (uses 64KB SMEM) | | | | Block 0 | | Block 1 | ... | Block N | |

| | TILE_DIM=128 -> High AI | | | | (8KB SMEM) | (8KB SMEM)| | (8KB SMEM)| |

| +--------------------------------+ | | +----------+ +-----------+ +-----------+ |

| | | |

| --> Low # of resident blocks. | | --> High # of resident blocks. |

| --> Small pool of warps for | | --> Large pool of warps for |

| latency hiding. | | latency hiding. |

+------------------------------------+ +----------------------------------------------+

We tune the kernel’s resource usage to balance the benefit of high AI against the necessity of sufficient occupancy. The primary levers for this tuning are the thread block dimensions (BLOCK_DIM), the amount of Shared Memory allocated per block (determined by TILE_DIM), and the number of registers used per thread.12

Avoiding Thread Divergence

A conditional branch (if-else) where threads in a warp disagree on the outcome causes thread divergence.13 When this occurs, the hardware resolves the divergence by executing the different code paths serially. First, threads that take the if path execute it while the others are inactive. Then, the roles are reversed for the else path.

# A warp of 32 threads encounters an `if` statement:

if (thread_id < 16)

# Path A

else

# Path B

Execution Timeline:

Time ->

+------------------------------------------------------------------+

| Warp Execution |

| |

| Cycle 1: Path A is executed. |

| - Threads 0-15: Active, execute Path A code. |

| - Threads 16-31: Inactive, masked off. |

| |

| Cycle 2: Path B is executed. |

| - Threads 0-15: Inactive, masked off. |

| - Threads 16-31: Active, execute Path B code. |

| |

| Result: Two cycles are required instead of one. |

| Effective throughput is halved. |

+------------------------------------------------------------------+

This serialization doubles the execution time of the divergent code, halving the warp’s effective throughput. We avoid this cost by writing branchless code in performance-critical sections, using primitives like min and max instead of if-else.

Quantization

Quantization reduces precision of elements of our tensor, from, say FP32 to FP16 or BFP16. This has two effects. First, it reduces the memory needed to store each element, for example, by 2. Thus, we can transfer twice as many elements per second from global memory to shared memory. This increases AI by 2.

Second, GPUs, such as the A100, can operate faster on lower precision elements. For example, on an A100, 312 TFLOPS are achievable for certain FP16 operations, whereas FP32 operations are limited to 19.5 TFLOPS. Thus, theoretically we can speedup computation by a factor of 16.

Quantization can therefore move us up and to the right on the Roofline plot.

-

I learned from this post that one should take these “peak” numbers with a grain of salt, since power limits effect clock speed. ↩

-

The (peak) 19.5 TB/s figure for shared memory is derived as follows: $32 \text{ banks} \times 4 \text{ bytes per bank cycle} \times 1.41 \text{ GHz clock} \times 108 \text{ SMs} = 19.5 \text{ TB/s}$. Note that read and write are on independent ports, so the bandwidth is doubled in some sense. ↩

-

Apparently, these numbers are not released by NVIDIA. I didn’t bother tracking them down because the main point is that this is the fasted memory to read and write from. ↩

-

Modern GPUs (e.g., A100s) actually have Independent Thread Scheduling (ITS). See page 14 and beyond of this document for a nice introduction to ITS. In particular, the authors write that in ITS, “[e]ach thread is given its own, individual program counter, meaning that theoretically, each thread can store its own unique instruction that it wants to perform next. The execution of threads still happens in warps, this has not changed. It is not possible for threads in a warp to perform different instructions in the same cycle. However, a warp may now bescheduled to progress at any of the different program counters that the threads within it are currently holding. Furthermore, ITS provides a“progress guarantee”: eventually, over a number of cycles, all individual program counters that the threads in a warp maintain will be visited. Thismeans that if, for instance, the execution has diverged and two branches, both are guaranteed to be executed sooner or later.” While ITS allows one to write correct branching code a bit more easily than in older architectures, one should still strive to write code where warps operate as much as possible in lockstep, so we can take advantage of the maximal number of parallel lanes. ↩

-

This also assumes that we can load the entire column of the matrix into the set of registers belonging to a single thread. Since each SM has a register file of size 256 KB, this not possible for large matrices. ↩

-

See Making Deep Learning Go Brrrr From First Principles for more on overhead. ↩

-

BTW as pointed out by Horace He), this effect explains why padding a model’s vocabulary size can improve performance. ↩

-

With Independent Thread Scheduling, we cannot rely on implicit synchronization between threads in a warp. Correctness requires an explicit barrier like

__syncthreads()to guarantee that, for example, all data is written to Shared Memory before any thread reads it. ↩ -

This post solves HBM coalescing (like Kernel 2) and SRAM bank conflicts (which Boehm addresses for matrix A in Kernel 6) simultaneously by transposing the B tile on-chip. Therefore, this strategy is a bit more advanced than the one in Boehm’s Kernel 3, which introduces Shared Memory but still suffers from bank conflicts. ↩

-

Two

64x64tiles of 4-byte floats require2 * 64 * 64 * 4 = 32768bytes (32 KB) of Shared Memory. This fits within the 48 KB available to a block on an A100 under default configuration. A kernel can request more (up to 100KB), but this reduces the number of thread blocks that can run concurrently on an SM, a trade-off with occupancy. ↩ -

Tensor Cores are specialized hardware units on NVIDIA GPUs that execute matrix-multiply-accumulate (

D = A@B + C) operations. On Ampere GPUs, they accelerateFP32inputs by first rounding them to the TensorFloat-32 (TF32) format. TF32 uses the same 8-bit exponent asFP32but only a 10-bit mantissa, matching the precision ofFP16. The internal accumulation is performed inFP32. This process is the default for some operations in deep learning frameworks, offering a speedup at the cost of lower precision. For maximum throughput, Tensor Cores also operate on formats likeFP16,BF16, andINT8. BTW how can they get away with calling TF32, TF32, when it is lossy! ↩ -

The SM has a finite physical Register File (e.g., 65,536 32-bit registers on an A100). The total number of registers a block requires is

(threads per block) * (registers per thread). The SM can only host as many concurrent blocks as can fit within its Register File and Shared Memory capacity. Therefore, using more registers per thread reduces the number of blocks that can be resident, lowering occupancy. The compiler allocates registers to warps in fixed-size chunks, so, e.g., a kernel requesting 33 registers per thread may be allocated 40, further impacting this resource calculation. ↩ -

Independent Thread Scheduling does not eliminate the cost of divergence. While each thread has its own Program Counter (PC), a register pointing to the next instruction, the warp is still managed by a scheduler that can only issue an instruction from a single address per cycle. When PCs within a warp diverge, the scheduler must serially execute each unique path, leaving all other threads idle. The simplified

if-elsemodel in the main text is thus still an accurate description of thread divergence since serialization diminishes the warp’s parallelism. ↩

Basic idea behind flash attention (V1)

The naive implementation of self-attention on a GPU is slow, not because of the number of floating-point operations (FLOPs), but because of memory accesses. Flash attention is a way to reduce memory access while still computing exact attention.

Recall the attention operation is defined as:

\[S = QK^T, \quad P = \text{softmax}(S), \quad O = PV\]Here, for a sequence of length $N$ and a head dimension $d$, the query $Q$, key $K$, and value $V$ matrices are size $N \times d$. The attention matrix $S$ and the probability matrix $P$ are both size $N \times N$.

Why is the naive implementation slow? A GPU has a memory hierarchy. A small amount of very fast on-chip memory (SRAM) is available to the compute cores. A much larger, but slower, pool of memory (HBM, or High-Bandwidth Memory) is off-chip. Most operations in a deep learning model are “memory-bound,” meaning their speed is limited by the time it takes to move data between HBM and SRAM, not by the arithmetic computations performed in SRAM. I recommend reading this blog post by Horace He for a quick primer on compute, memory, and overhead in deep learning.

The standard implementation of attention materializes the $N \times N$ matrices $S$ and $P$ in HBM. This involves:

- Reading $Q$ and $K$ from HBM.

- Computing $S=QK^T$ and writing $S$ back to HBM.

- Reading $S$ from HBM.

- Computing $P=\text{softmax}(S)$ and writing $P$ back to HBM.

- Reading $P$ and $V$ from HBM.

- Computing $O=PV$ and writing $O$ back to HBM.

For long sequences ($N \gg d$), the $O(N^2)$ memory reads and writes for $S$ and $P$ dominate the runtime.

The problem is the HBM traffic. Let B be the bytes per element. The implementation writes and then reads the $N \times N$ matrix $S$ (totaling $2N^2B$ bytes), and then writes and reads the $N \times N$ matrix $P$ (another $2N^2B$ bytes). These four operations, which transfer data to and from the large but slow HBM, dominate the $O(NdB)$ traffic from reading the initial inputs $Q, K, V$ and writing the final output $O$. The total HBM access is thus $O(N^2 + Nd)$ bytes.

Flash attention (V1)

FlashAttention computes the same attention output $O$ without writing the full $N \times N$ matrices $S$ and $P$ to HBM. It achieves this by restructuring the computation using two techniques: tiling and online softmax. The idea is to perform the entire attention calculation in smaller blocks, keeping all intermediate products within the fast on-chip SRAM until the final block of the output $O$ is ready. This post, again by Horace, is useful for seeing why tiling is a good idea.

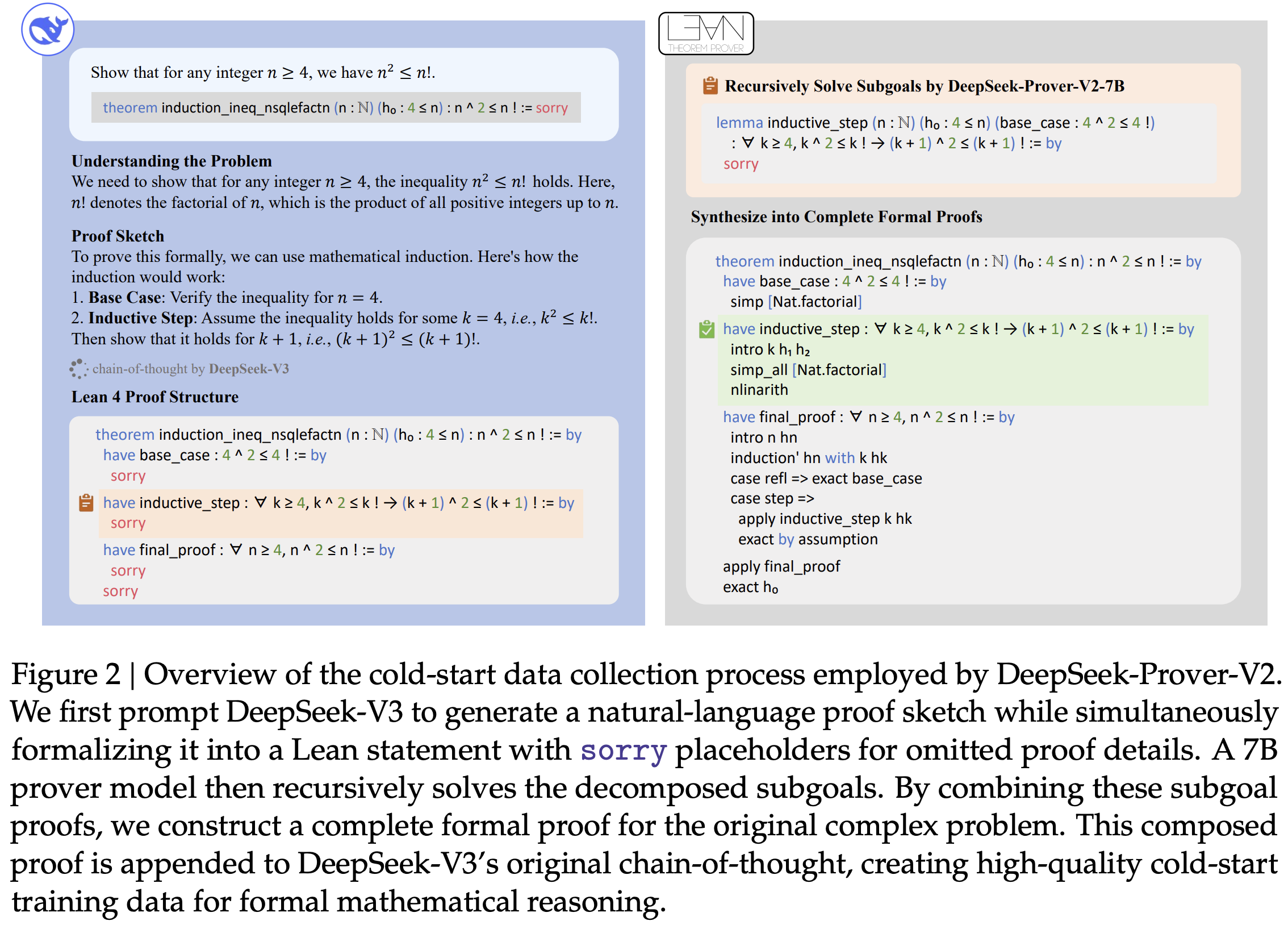

Figure 1 from the FlashAttention paper.

Figure 1 from the FlashAttention paper.

NOTE: this post is about the original FlashAttention paper. There are now three versions of FlashAttention, but we’ll stick with V1 for simplicity.

Tiling

The computation is broken into blocks. The matrices $Q, K, V$ are partitioned into smaller sub-matrices. The algorithm iterates through blocks of $K$ and $V$, loading them into SRAM. Within this outer loop, it iterates through blocks of $Q$.

A simplified view of the loops:

// O is the final output matrix, initialized to zero

// l, m are statistics for the online softmax, initialized

for each block K_j, V_j in K, V:

Load K_j, V_j into fast SRAM

for each block Q_i in Q:

Load Q_i, O_i, l_i, m_i into fast SRAM

// Core computation, all on-chip

Compute S_ij = Q_i @ K_j^T

Compute P_ij = softmax(S_ij) // (This is a simplification, see next section)

Compute O_ij = P_ij @ V_j

// Update the output block O_i with the new result

Update O_i using O_ij and softmax statistics

// Write the updated O_i, l_i, m_i back to HBM

Write O_i, l_i, m_i to HBM

This tiled structure changes the memory access pattern. The algorithm iterates through $K$ and $V$ in blocks, reading each element from HBM only once. The critical part is the inner loop. For each block of $K_j$ loaded into SRAM, the algorithm must iterate through all blocks of $Q$ to compute the corresponding updates to the output. This means the algorithm makes multiple passes over the $Q$ matrix. The number of passes is determined by the number of blocks in $K$, which is $T = N/B$, where $B$ is the block size. Since the on-chip SRAM of size $M$ must hold a block of $K$ (size $B \times d$), the block size $B$ is limited to $O(M/d)$. This leads to $T = O(Nd/M)$ passes. Each pass reads the entirety of $Q$ (and reads/writes blocks of $O$), resulting in HBM traffic of $O(Nd \times T) = O(N^2 d^2 / M)$ bytes. This quantity is substantially smaller than the $O(N^2)$ traffic of standard attention.

Example:

To make this concrete, consider an NVIDIA A100 GPU. Each of its Streaming Multiprocessors has 192KB of SRAM. A single kernel will use a portion of this, let’s assume a working SRAM size of M_bytes = 128KB. If we use bfloat16 precision (2 bytes per number), the effective SRAM size in elements is M = 128 * 1024 / 2 = 65,536 elements. For a typical head dimension d=64, $d^2 = 4096$. The reduction factor in memory accesses is approximately $M/d^2 = 65536 / 4096 = 16$. FlashAttention performs roughly 16 times fewer HBM accesses than the standard implementation. For $d=128$, the factor is $M/d^2 = 65536 / 16384 = 4$.

Online Softmax

The softmax function is applied row-wise. For a row $x_i$ of the attention score matrix $S$, the output is $\text{softmax}(x_i)_j = \frac{e^{x_{ij}}}{\sum_k e^{x_{ik}}}$. The denominator requires a sum over the entire row, which seems to prevent block-wise computation.

The “online softmax” algorithm solves this. It computes the exact softmax by maintaining two running statistics for each row $i$: the maximum value seen so far ($m_i$) and the sum of the exponentials of the scaled values ($l_i$). For numerical stability, softmax is computed as:

\[m(x) = \max_j(x_j), \quad l(x) = \sum_j e^{x_j - m(x)}, \quad \text{softmax}(x)_j = \frac{e^{x_j - m(x)}}{l(x)}\]When processing the $j$-th block of a row $x_i$, which we denote $x_{i,j}$, we can update the statistics and the output $O_i$ as follows:

- Compute the local statistics for the current block: $m_{i,j} = \max_k(x_{ik})$ and $l_{i,j} = \sum_k e^{x_{ik} - m_{i,j}}$.

- Compute the new global maximum: $m_i^{\text{new}} = \max(m_i^{\text{old}}, m_{i,j})$.

-

Update the output value using the old and new statistics:

\[O_i^{\text{new}} = \frac{1}{l_i^{\text{new}}} \left( l_i^{\text{old}} e^{m_i^{\text{old}} - m_i^{\text{new}}} O_i^{\text{old}} + l_{i,j} e^{m_{i,j} - m_i^{\text{new}}} O_{i,j} \right)\]where $O_{i,j}$ is the attention output computed using only the current block of $K$ and $V$, and $l_i^{\text{new}}$ is the appropriately rescaled sum.

It’s straightforward to see that by storing and updating these two scalars ($m_i, l_i$) per row, Flash attention can produce the exact output of the softmax operator without needing the whole row at once.

The Backward Pass and Recomputation

The backward pass of attention requires the gradients $\frac{\partial L}{\partial Q}, \frac{\partial L}{\partial K}, \frac{\partial L}{\partial V}$. Computing these requires the matrix $P$ from the forward pass. A standard implementation would read the saved $N \times N$ matrix $P$ from HBM, incurring the same memory bottleneck.

Flash attention avoids this by not storing $P$ at all. Instead, during the backward pass, it recomputes the necessary blocks of the attention matrix $P$ as needed. It does this by loading the required blocks of $Q$, $K$, and $V$ from HBM back into SRAM. The only values saved from the forward pass are the final output $O$ $(N \times d)$ and the online softmax statistics ($m, l$).

This introduces more FLOPs, as parts of the forward pass are re-done. However, since the operation is memory-bound, the time spent on these extra FLOPs is insignificant compared to the time saved by avoiding a massive read from HBM.

Using min cut to determine activation recomputation strategy

Standard automatic differentiation saves many intermediate “activations” from the forward pass to be reused during the backward pass. This can be memory-intensive. Recomputation, or activation checkpointing, is a technique that saves memory by re-calculating these activations during the backward pass instead of storing them. While this suggests a trade-off between memory and compute, a fusing compiler like NVFuser changes the calculation.

For a chain of pointwise operations (e.g., add, relu, cos), a fusing compiler can execute them in a single GPU kernel. The performance of this kernel is limited by memory bandwidth, the speed of reading from and writing to the GPU’s global memory (HBM), not by the arithmetic operations themselves. This means that recomputing a sequence of fused pointwise operations is nearly free, provided the initial input to the sequence is available.

Thus, the problem of choosing which activations to save in the forward pass is about minimizing memory traffic, not necessarily minimizing FLOPs. I came across a very clever strategy for doing this in a blog post by Horace He. There, Horace frames the problem as a “min cut” on the computation graph and sees some nice improvements. I recommend reading the post for details. Here I’ll mainly work out some details that I was confused about in Horace’s original post. 1

Backward and forward passes

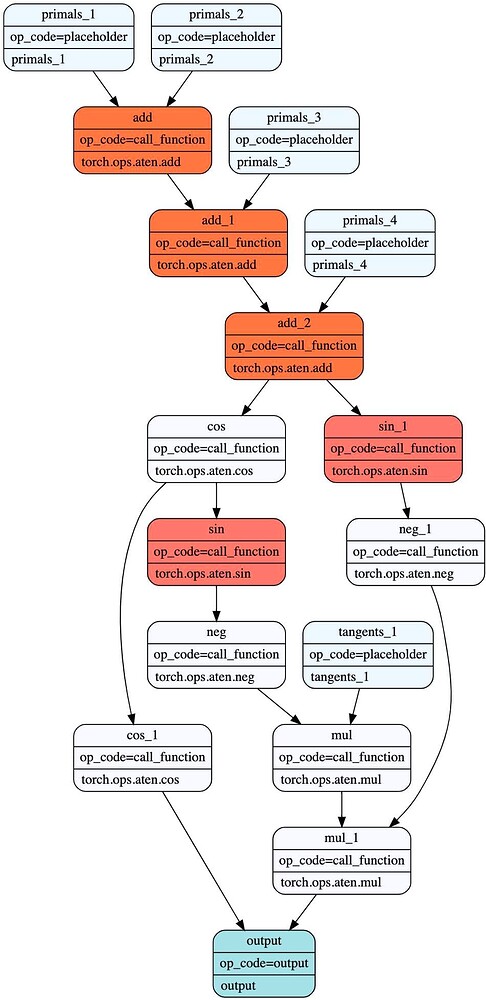

Consider the function f(a,b,c,d) = cos(cos(a+b+c+d)). Let z = a+b+c+d. The forward pass computes z, then cos(z), then y = cos(cos(z)).

The chain rule dictates what the backward pass needs. To compute the gradients, it requires the intermediate values, or “activations,” z and cos(z) to calculate sin(z) and sin(cos(z)):

dy = incoming ∂L/∂y

dcos = dy * (-sin(x.cos()))

dx = dcos * (-sin(x))

da,db,dc,dd = dx

A standard autograd system, as shown in the first figure, would save the z and cos(z), require 2 reads and 2 writes. This ensures the values are available for the backward pass.

This is a safe but suboptimal strategy. The total data transferred between the forward and backward passes for this strategy is the size of two tensors. We can do better. The key insight is that if we save only z (the output of add_2), we can recompute cos(z) on-the-fly inside the backward pass’s fused kernel. This halves the memory traffic.

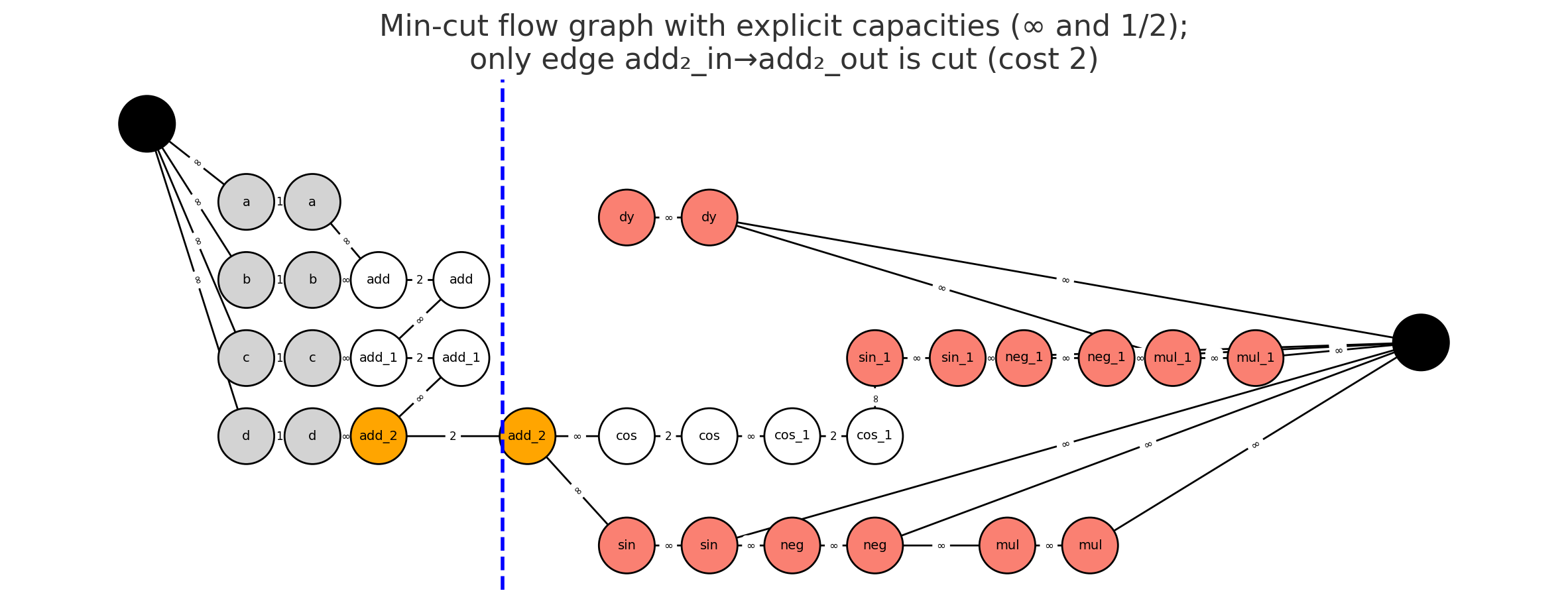

The Min-Cut Formulation

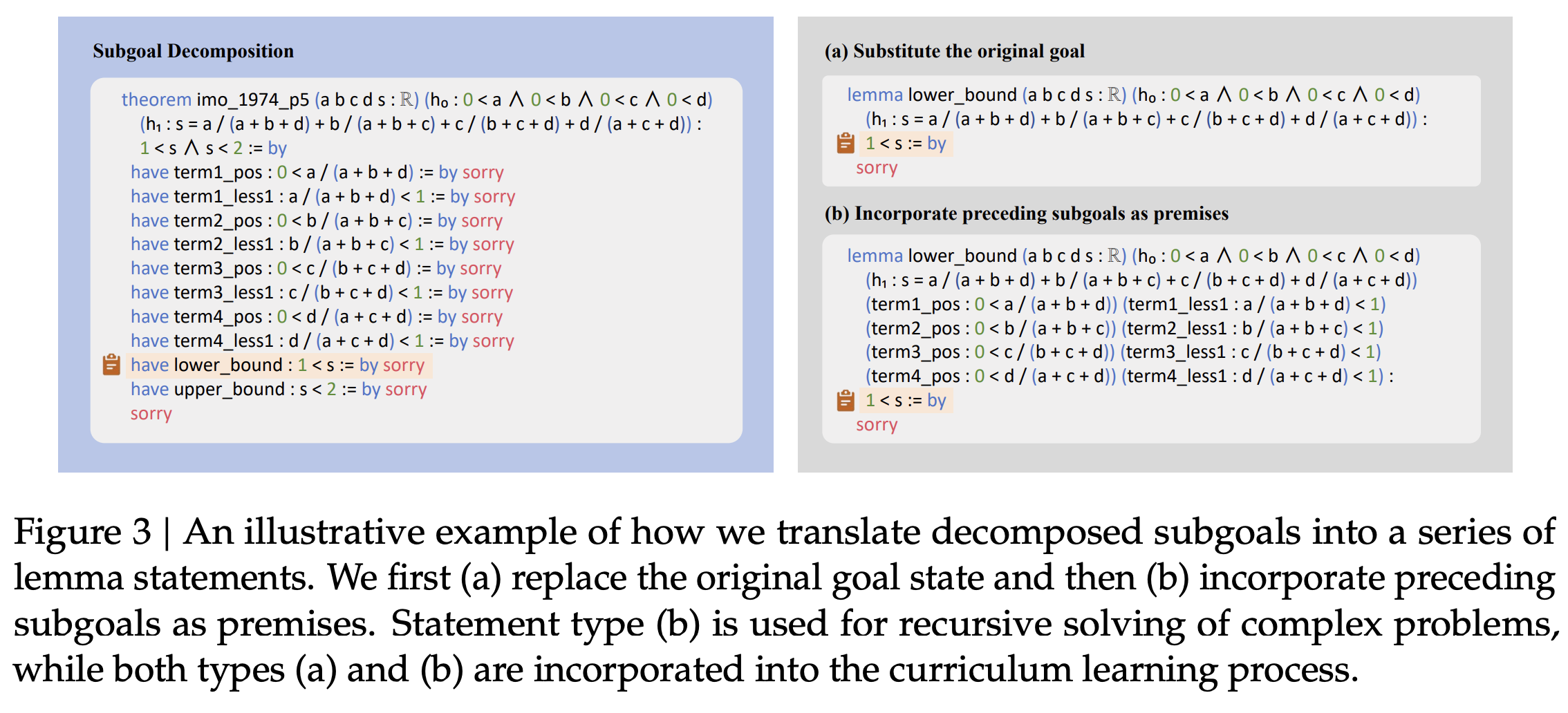

To find this optimal set of checkpointed tensors automatically, we model the problem as finding a minimum cut in a graph.

-

The Graph: We construct a graph representing the full forward and backward computation. We add a virtual source,

SRC, and a virtual sink,SNK. - The Source and Sink Sets:

- The source set represents the beginning of the forward pass. The

SRCnode is connected to all initial inputs of the model (e.g.,a, b, c, d). - The sink set represents the operations that must run in the backward pass. An operation must be in the backward pass if it depends on the incoming gradient. This set of operations is called the tangent closure. In the figures, these are the red nodes. The

SNKnode is connected to all nodes in the tangent closure.

- The source set represents the beginning of the forward pass. The

-

Edges and Costs: The problem is transformed into a standard edge-cut problem via node splitting. Every operation node

vis split into two nodes,v_inandv_out, connected by an edge.- Split Edges (

v_in -> v_out): These are the only edges with a finite cost, or. The cost of this edge is the cost of checkpointing the tensorv.Cost = 2 * B(v)for an intermediate activation. This cost represents onewriteto global memory and onereadfrom it.Cost = 1 * B(v)for a forward pass input that already exists in global memory. This cost represents just oneread. Here,B(v)is the size of the tensor in bytes.

- Data-Flow Edges (

u_out -> v_in): Edges representing the flow of data between operations have infinite cost. This models our assumption that recomputation within a fused kernel is free.

- Split Edges (

-

The Cut: The min-cut algorithm finds the set of edges with the minimum total cost that must be severed to separate

SRCfromSNK. Because only the split edges have finite cost, the algorithm will only sever those.Mathematically, the algorithm solves for the

\[\text{minimize} \sum_{(u \to v) \in \text{cut}} \text{cost}(u \to v)\]s-tcut 2 with minimum total cost:The sum is over all edges in the cut. Since data-flow edges have infinite cost, a minimal cut will only ever consist of the finite cost”split edges.” The problem is thus equivalent to finding the cheapest set of split edges to sever.

An edge

v_in -> v_outbeing cut means we have decided to pay its cost and checkpoint the tensorv. The nodes whose edges are cut are colored orange. Nodes on theSRCside of the cut are inputs. Nodes on theSNKside are either part of the mandatory tangent closure (red) or are operations that will be recomputed (white).

For our example, the algorithm compares the costs of all possible cuts:

- Cut at

add_2: Severs theadd_2_in -> add_2_outedge. Cost =2B. - Cut at

cosandcos_1: Severs two edges. Cost =2B + 2B = 4B. - Cut at the inputs: Severs four edges. Cost =

1B + 1B + 1B + 1B = 4B.

The minimum cost is 2B, corresponding to cutting only the add_2 edge. This means the optimal strategy is to checkpoint add_2 (orange), recompute cos and cos_1 (white), and feed the results to the tangent closure (red).

Thus, the min-cut formulation finds the checkpointing strategy that minimizes memory traffic under the fused computation model. These min-cut problems can be solved efficiently with standard max-flow algorithms.

-

See also this paper, which Horace pointed me to. ↩

-

An

s-tcut is a set of edges whose removal disconnects all paths fromSRCtoSNK. ↩

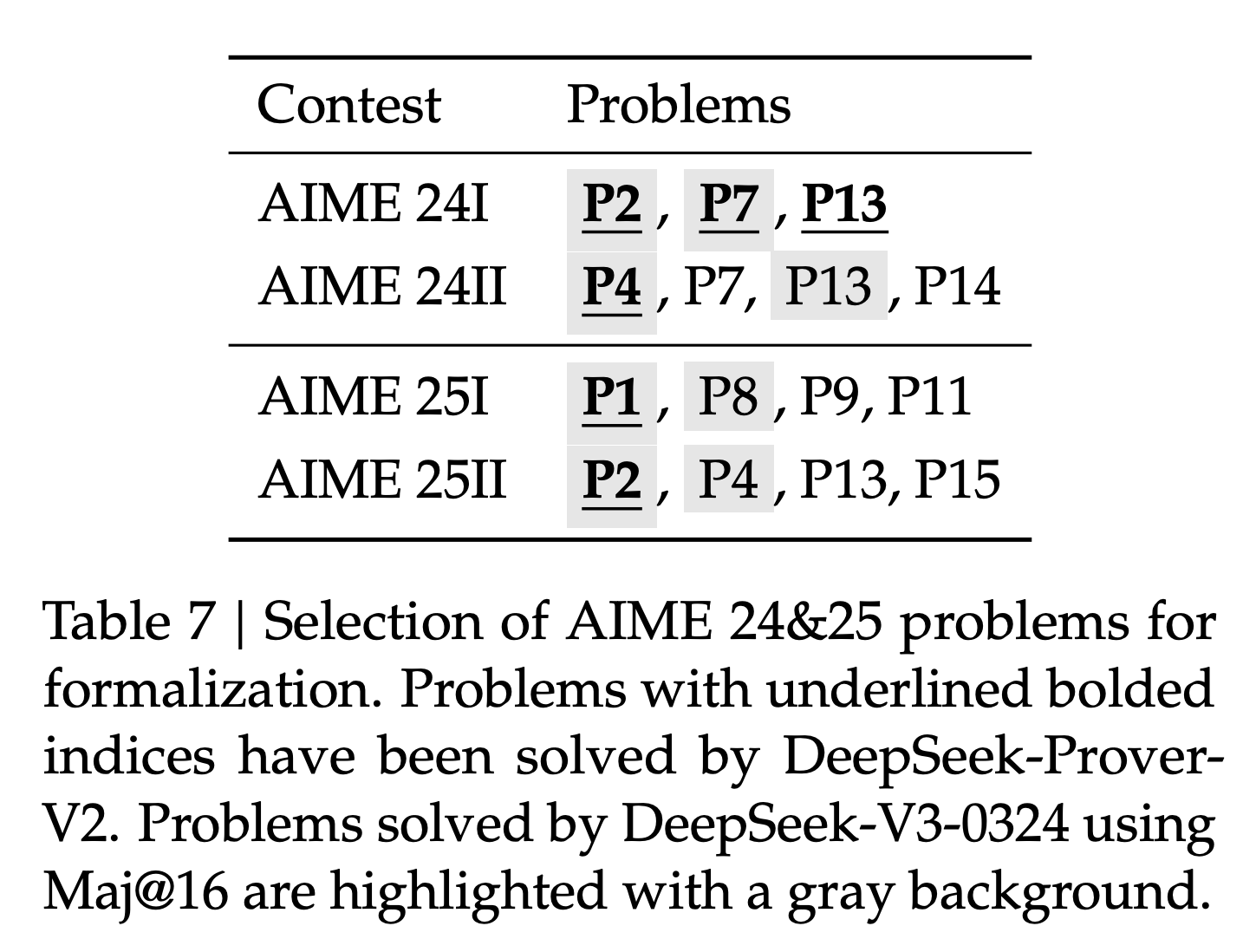

Linear layouts, triton, and linear algebra over F_2

@typedfemale posted the paper “Linear Layouts: Robust Code Generation of Efficient Tensor Computation Using F2” on X (link to tweet). It looked interesting. I tweeted:

“I will actually read this. it’s always the very interesting things that use lin alg over finite fields. i remember in undergrad when i first saw a “real” application of it while learning the general number field sieve.”

I struggled with this paper. This post is the result of that effort. Hopefully, it is useful.

My goal here is to just give a sense of their main idea: using linear algebra over the finite field $F_2$ to manage how tensor data is arranged for computation on GPUs. This is outside my usual area of optimization and machine learning, but anyway it looked like a fun read.

1. GPU and Tensor Layouts

Deep learning models use many tensor computations. GPUs accelerate these computations. The specific arrangement of tensor data on the GPU is called a “tensor layout.” This layout dictates, for example, which register within a specific thread (itself part of a GPU warp, a group of threads executing in lockstep) holds which piece of tensor data for an operation. This layout directly affects performance.

Current methods for defining and managing tensor layouts can apparently be complex.1 They can be specific to particular hardware or operations. This makes it difficult to build systems that are both flexible for different models and hardware, and also consistently fast and error-free. Incorrect or inefficient layouts lead to slow memory access, underused compute units, and slower models.

Triton is a language and compiler, originally developed by OpenAI, for writing custom code segments that run on GPUs, known as GPU kernels. People who train like to write performant ML code use Triton to create these kernels, for example, to fuse multiple operations (like a matrix multiplication followed by an activation function) into a single GPU kernel. The “Linear Layouts” paper aims to improve how Triton’s compiler system handles these tensor layouts.

2. Linear Layouts via Linear Algebra over $\mathbb{F}_2$

The paper “Linear Layouts: Robust Code Generation of Efficient Tensor Computation Using $\mathbb{F}_2$” defines a tensor layout using linear algebra over the finite field $\mathbb{F}_2 = \{0, 1\}$. Each layout is a matrix $A$ with entries in $\mathbb{F}_2$. This matrix $A$ specifies a linear map.

For a distributed layout, this map assigns tensor data to specific hardware processing units and their local storage. The matrix $A$ maps an identifier for a hardware resource to the coordinates of a logical tensor element.

- The input to this map (the domain) is a vector $v \in \mathbb{F}_2^n$. The bits of $v$ identify a unique hardware slot. This vector is a concatenation of bit vectors representing, for example, a register index $v_{\text{reg}}$, a thread index $v_{\text{thr}}$, and a warp index $v_{\text{wrp}}$, so $v = [v_{\text{reg}} | v_{\text{thr}} | v_{\text{wrp}}]$.

- The output of the map (the codomain) is a vector $w \in \mathbb{F}_2^m$. The bits of $w$ are the binary representation of the coordinates of an element in the logical tensor. For a 2D tensor

Tensor[i,j], $w$ would be formed by concatenating the binary representations of the integer indices $i$ and $j$, e.g., $w = [\text{bits}(i) | \text{bits}(j)]$. - The mapping is $w = Av$. All matrix and vector operations use $\mathbb{F}_2$ arithmetic, where addition is XOR and multiplication is AND.

This matrix $A$ dictates which hardware slot $v$ is responsible for which logical tensor element $w$. The authors state this system can represent existing layouts, such as those in Triton, and define new ones because these mappings from hardware bit identifiers to logical coordinate bit identifiers can be expressed as such matrices.

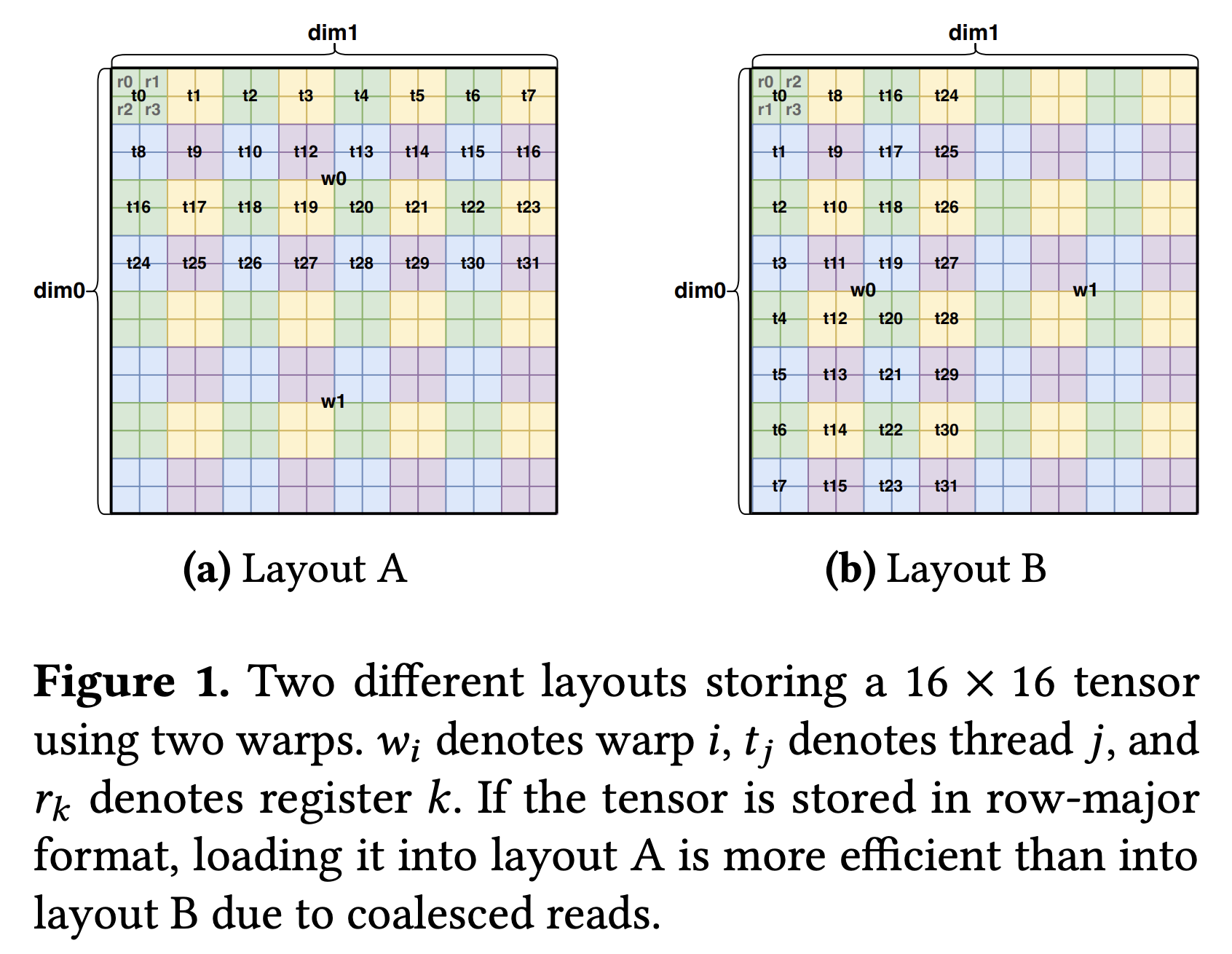

Example: Layout A from Figure 1 (Page 4 of the Paper)

Figure 1a in the paper illustrates two distributed layouts.

Let’s look into Layout A, which can be represented by a $8 \times 8$ matrix $A$ with entries in $\mathbb{F}_2$. This matrix maps an 8-bit hardware ID $v$ to an 8-bit logical coordinate $w$ for a $16 \times 16$ tensor.

-

Input:

\[v = [v_0(\text{reg}_0) \, v_1(\text{reg}_1) \,|\, v_2(\text{thr}_0) \, v_3(\text{thr}_1) \, v_4(\text{thr}_2) \, v_5(\text{thr}_3) \, v_6(\text{thr}_4) \,|\, v_7(\text{wrp}_0)]^T\](Bit $v_k$ is the $k$-th component of $v$; labels like $\text{reg}_0$ indicate conceptual roles).

-

Output:

\[w = [w_0(i_0) \, w_1(i_1) \, w_2(i_2) \, w_3(i_3)\, |\, w_4(j_0) \, w_5(j_1) \, w_6(j_2) \, w_7(j_3) ]^T\]The bits $w_0..w_3$ form the column index $j$ ($j_0$ is LSB). The bits $w_4..w_7$ form the row index $i$ ($i_0$ is LSB).

Note we’re slightly deviating from the paper. There, they define $w_{0:3} = j$ and $w_{4:7} = i$. I find it more natural to define $w_{0:3} = i$ and $w_{4:7} = j$. This means that $j = w_7\cdot 2^3 + w_6 \cdot 2^2 + w_5\cdot 2^1 + w_4\cdot 2^0$, etc

To construct the matrix $A$ frin Figure 1a for this linear layout, we observe that it’s mapping $w = Av$ has the following effect.

- Incrementing $v_0$ (respectively, $v_1$) increments $j$ by $1$ (respectively, $i$ by $1$). Thus, the least significant bit $w_4$ of $j$ (respectively $w_0$ of $i$) is precisely $v_0$ (respectively $v_1$).

- Incrementing $v_2$ increments $j$ by $2$. Thus, the second bit $w_5$ of $j$ is precisely $v_2$. Likewise, incrementing $v_5$ by $1$ increments $i$ by $2$, so the second bit $w_2$ of $i$ is precisely $v_5$.

Continuing with similar reasoning, we can show the following:

\[A = \begin{pmatrix} % v0 v1 v2 v3 v4 v5 v6 v7 0 & 1 & 0 & 0 & 0 & 0 & 0 & 0 \\ % w0 (i0) 0 & 0 & 0 & 0 & 0 & 1 & 0 & 0 \\ % w1 (i1) 0 & 0 & 0 & 0 & 0 & 0 & 1 & 0 \\ % w2 (i2) 0 & 0 & 0 & 0 & 0 & 0 & 0 & 1 \\ % w3 (i3) 1 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ % w4 (j0) 0 & 0 & 1 & 0 & 0 & 0 & 0 & 0 \\ % w5 (j1) 0 & 0 & 0 & 1 & 0 & 0 & 0 & 0 \\ % w6 (j2) 0 & 0 & 0 & 0 & 1 & 0 & 0 & 0 % w7 (j3) \end{pmatrix}\]From this matrix, you can verify that it indeed maps various hardware ids slots to the correct logical tensor elements. For example, the slot with the slot corresponding to register 0, thread 0, warp 0 maps to the logical tensor element Tensor[0,0], $i = w_{3:0} = 0000$ and $j = w_{7:4} = 0000$ (notice I reversed the bits). On other hand, the slot corresponding to register 1, thread 1, warp 0 maps to the logical tensor element Tensor[0,3], $i = w_{3:0} = 0000$ and $j = w_{7:4} = 0011$.

3. Memory Layouts and Shared Memory Interaction

Distributed layouts assign tensor elements to hardware compute units. Memory layouts describe how these logical tensor elements are arranged linearly within a memory segment, such as a GPU’s global or shared memory. A memory layout can be represented by a matrix $M_{\text{mem}}$ with entries in $\mathbb{F}_2$. This matrix $M_{\text{mem}}$ maps a vector representing a memory offset to the bits of the logical tensor coordinate stored at that offset:

logical_tensor_coordinate = M_mem * memory_offset_bits.

When we know the logical coordinate of a tensor element and need to find its location in memory, we use the inverse map, $M_{\text{mem_inv}}$. This matrix maps the bits of a logical tensor coordinate to the bits of its memory offset:

memory_offset_bits = M_mem_inv * logical_tensor_coordinate_bits.

A Simple Memory Layout: Row-Major Order

To understand memory access patterns, we first consider a basic row-major arrangement for a $16 \times 16$ tensor, Tensor[i,j]. The linear memory offset is offset = i * 16 + j (assuming unit-sized elements). Suppose the logical coordinate vector $w$ follows our convention: $w = [w_0(i_0) \dots w_3(i_3) | w_4(j_0) \dots w_7(j_3)]^T$. Here, $w_{0..3}$ are the bits of $i$ (LSB $i_0$ to MSB $i_3$), and $w_{4..7}$ are the bits of $j$ (LSB $j_0$ to MSB $j_3$). The memory offset $k$ is an 8-bit value $k = [k_0(\text{MSB}) \dots k_7(\text{LSB})]^T$. For row-major storage, the column index $j$ forms the lower-order bits of the offset ($k_{0..3}$), and the row index $i$ forms the higher-order bits ($k_{4..7}$).

The matrix $M_{\text{mem_inv}}: w \mapsto k$ that achieves this is an $8 \times 8$ permutation matrix:

This matrix $M_{\text{mem_inv}}$ takes $w=[\text{bits}(i) | \text{bits}(j)]$ and produces $k=[\text{bits}(j) | \text{bits}(i)]$, correctly placing $j$-bits into the lower part of $k$ and $i$-bits into the higher part.

GPU Shared Memory Structure

A GPU’s shared memory is a fast, on-chip memory. It is physically divided into multiple (e.g., 32) independent banks. Each bank contains many addressable lines (also called vector words or cache lines), typically the size of a wide hardware memory access (e.g., 128 bits). Each line stores multiple individual data elements (e.g., four 32-bit floats).

A specific data element within shared memory is pinpointed by three components:

bank_hw: Selects the physical bank.idx_hw: Selects the line (vector word) within that bank.vec_hw: Selects the specific element within that chosen line.

A useful way to visualize this memory is:

bank 0: [line 0, line 1, line 2, ..., line N-1]

bank 1: [line 0, line 1, line 2, ..., line N-1]

...

bank 31: [line 0, line 1, line 2, ..., line N-1]

Accessing different banks simultaneously is done in parallel (to my knowledge). Accessing the same bank simultaneously by different threads for different lines causes bank conflicts, serializing operations. On the other hand, accessing elements within the same line simultaneously can be performed in parallel; this is called vectorized access. Efficient memory access thus requires careful mapping of data to these (vec_hw, bank_hw, idx_hw) components.

The Swizzling Map s

The paper’s “optimal swizzling” algorithm constructs a specialized memory layout for shared memory, defined by an $\mathbb{F}_2$ matrix $s$. This map $s$ defines how the structured shared memory hardware components map to the logical tensor coordinate stored at that physical slot:

- Input (domain): A bit vector

v_sm = [vec_hw | idx_hw | bank_hw]representing a shared memory slot description. - Output (codomain): The logical tensor coordinate $w = [\text{bits}(i) | \text{bits}(j)]$ (using our convention for $w$) stored at that slot.

- Mapping: $w = s \cdot v_{\text{sm}}$.

The purpose of constructing this swizzling map $s$ is to arrange logical data across physical shared memory slots in a non-obvious (“swizzled”) manner. This design ensures that when threads (operating under a given distributed layout) need to access various logical tensor coordinates, the corresponding shared memory hardware slots (vec_hw, idx_hw, bank_hw) (found via $s^{-1}(w)$) are distributed to minimize bank conflicts and enable efficient vectorized reads and writes.

4. Linear Algebra and Layouts

A compiler must perform several tasks to generate efficient GPU code: it must arrange data for memory access, rearrange it for computation, and map these operations to specialized hardware instructions. Representing layouts as matrices allows the compiler to perform these tasks using linear algebra.

Layout Conversion Between Layouts Optimized for Memory and Compute

We can use matrix products to represent how a GPU kernel moves data between a layout optimized for memory access and one required by a compute unit.

Loading data from global memory is fastest when threads access contiguous blocks of memory, a pattern known as coalesced access. This suggests a simple, row-major layout. However, a specialized compute unit, like a Tensor Core, may require data to be presented in a completely different layout to perform its calculations. We can compute this conversion using matrix products.

Let layout $A$ be the source (e.g., memory layout) and $B$ be the destination (e.g., compute layout). We need to find the transformation $C$ that maps a hardware resource $v_A$ in the source layout to its corresponding resource $v_B$ in the destination. The same logical data $w$ must satisfy $w = Av_A$ and $w = Bv_B$.

For layout $B$ to be a valid destination, it must be surjective, i.e., its mapping can reach every element of the logical tensor. This guarantees that a right inverse $B^{-1}$ exists. By setting the expressions for $w$ equal, we find the conversion map:

\[v_B = (B^{-1}A)v_A\]The structure of the resulting matrix $C = B^{-1}A$ describes the hardware operations needed to move data. For example, if $C$ only permutes register bits, the conversion is a simple move between registers within each thread. If it mixes bits between different threads within a warp, it requires an “intra-warp shuffle,” a hardware instruction that exchanges data between threads in the same execution group.

Product Operator for Hierarchical Layout Design

The product operator constructs complex layouts from simpler component layouts. This process corresponds to creating a block-diagonal matrix. A developer can define a layout for registers within a thread ($L_{reg}$) and a layout for the threads in a warp ($L_{thr}$) separately. The product operator $L_{reg} \times L_{thr}$ combines them into a single layout $L$ for the entire warp. The matrix for $L$ is the block-diagonal composition of its component matrices:

\[L = \begin{pmatrix} L_{reg} & 0 \\ 0 & L_{thr} \end{pmatrix}\]Left Division for Matching Hardware Instructions

Left division determines if a data layout can be loaded or stored using a specialized hardware instruction. An instruction like ldmatrix loads a fixed-size tile of data from shared memory into registers. Its operation is defined by a corresponding tile layout, a small matrix $T$. This matrix maps the hardware resources for a single instruction call (e.g., the bits of the register IDs) to the data it acts upon (e.g., the bits of the memory offsets).

The compiler checks if the kernel’s larger data layout $L$ is compatible with the instruction’s tile layout $T$. This is not arithmetic division. It is a test of matrix structure. Left division $L / T$ succeeds if $L$ can be decomposed into the block-diagonal form:

\[L = \begin{pmatrix} T & 0 \\ 0 & R \end{pmatrix}\]where $R$ is the remainder layout. This decomposition confirms that a subset of $L$’s input resources maps to a subset of its output data using a transformation that is structurally identical to $T$, with no interference from the other resources governed by $R$.

If the division succeeds, the compiler can generate a sequence of ldmatrix instructions to load the data for the entire layout $L$. The tile layout $T$ governs the loading of the first block. The remainder layout $R$ is then used to calculate the starting address for the next block, and the process is repeated. This automates the use of high-throughput hardware instructions for any compatible layout.

Unification of Triton’s Layouts

In Triton’s legacy system, conversions between different layout types led to a “quadratic explosion” of custom implementations. Each pair of layouts required a unique, manually-coded conversion function. This made the compiler complex and difficult to extend, as adding a new layout type required implementing new conversion paths to all existing types.

The paper proves that all of Triton’s legacy layouts can be represented as linear layouts (Theorem 4.9). This result allows the compiler to replace the entire system of custom conversion functions with a single matrix operation. The conversion from any layout $A$ to any layout $B$ is now specified by the matrix product $B^{-1} A$.

This simplifies compiler development. To add a new layout, a developer provides its matrix definition. Conversions to and from all other defined layouts are then handled by the existing matrix product operation.

5. Optimizing Code

The framework allows a compiler to automate optimizations by translating them into questions about matrix properties.

Vectorization

Previously, Triton identified vectorization opportunities by finding a tensor’s fastest-running dimension and assuming it determined contiguity. This heuristic fails for a tensor of shape [128, 1]. The fastest-running dimension has size 1, so Triton would disable vectorization, even though 128 contiguous elements are available along the other dimension.

The linear layout method replaces this inference with a direct computation. The physical property of contiguous logical elements being stored in contiguous registers is equivalent to an identity map on their address bits.

More specifically, the compiler queries the layout’s inverse matrix, $L^{-1}$. It finds the largest integer $k$ for which the $k \times k$ sub-matrix mapping the lowest-order logical bits to the lowest-order register bits is the identity matrix. This $k$ is the precise vectorization width, calculated directly from the layout’s structure.

Broadcasting

Broadcasting is the replication of a single logical element across multiple hardware resources, such as different threads. In a reduction operation, like a sum, these duplicated values must be counted only once to avoid incorrect results.

Linear layouts provide a direct method for identifying this replication. Specifically, the duplication of a logical element across threads is represented in the layout matrix $A$ as a zero-column in the section corresponding to the thread ID bits.

A zero-column means that changing the thread ID does not change the resulting logical address $w$ in the mapping $w = Av$. The compiler inspects the matrix for this property to ensure each logical element is processed only once during a reduction.

Mixed-Precision Data Shuffling

A mixed-precision matrix multiplication computes the product of two matrices with different data types, such as mxfp4 and bf16. The overall computation is tiled, meaning it is broken down into smaller matrix multiplications on tiles of the input data. A performance bottleneck arises if the bf16 tiles cannot be loaded into registers as efficiently as the mxfp4 tiles.

The GPU hardware can execute wide vector instructions, which load a fixed-size block of data (e.g., 16 bytes) from a contiguous block of memory addresses in a single operation. The challenge is to arrange the data in memory such that all elements needed for a single compute tile are physically contiguous and can be fetched with one of these instructions.

For the bf16 data type, a standard memory layout may not have this property. The solution is a data shuffle: a pre-processing step that copies the bf16 data into a new arrangement within on-chip shared memory, organizing it so that each tile’s worth of data resides in a contiguous block of addresses.

The user specifies the logic of this shuffle with a high-level shape operation. For instance, if the hardware instruction expects a tile’s data in a layout that is logically the transpose of what a simple load provides, the user specifies a tt.transpose operation.

This shape operation is a linear transformation on the tensor’s logical coordinates and is represented by a permutation matrix, $T_{shape}$. The compiler combines this with the original layout matrix, $A_{bf16}$, to compute the new, shuffled layout $A_{shuffled}$:

\[A_{shuffled} = T_{shape}A_{bf16}\]The compiler uses this resulting matrix $A_{shuffled}$ to generate the code that performs the physical memory copy for the shuffle.

Generating Code for Layout Conversions

To convert a tensor from a source distributed layout $A$ to a destination distributed layout $B$, the compiler first computes the conversion matrix $C = B^{-1}A$. This matrix maps the source hardware resources to the destination hardware resources. The structure of $C$ determines the cheapest hardware primitive that can perform the conversion.

The compiler checks the structure of $C$ in order of cost, from cheapest to most expensive.

-

Intra-thread Register Move: This is the cheapest conversion. It is possible if the data movement is confined entirely within each thread. This is the case if the conversion matrix $C$ only permutes register bits, meaning the sub-matrix $(B^{-1}A)_{reg}$ is a permutation matrix and the rest of $C$ is the identity.

-

Intra-warp Shuffle: If an intra-thread move is not possible, the compiler checks if the conversion can be done with warp shuffles. A warp shuffle is a hardware instruction that allows threads within the same warp to exchange data directly without using shared memory. This is possible if the conversion matrix $C$ does not move data between warps, which corresponds to the warp-related block of the matrix, $(B^{-1}A)_{wrp}$, being the identity matrix.

-

Inter-warp Conversion via Shared Memory: If neither of the above conditions is met, data must move between different warps. This is the most expensive conversion and requires using on-chip shared memory as an intermediary. Data from the source warps is written to shared memory and then read by the destination warps.

Generating Warp Shuffles

When a conversion requires an intra-warp data exchange, the compiler generates a sequence of hardware shfl.sync instructions. A receiving thread executes shfl.sync(value, srcLane) to fetch a value from the registers of a sending thread, identified by its lane index srcLane. The linear layout framework provides a direct method for calculating these parameters.

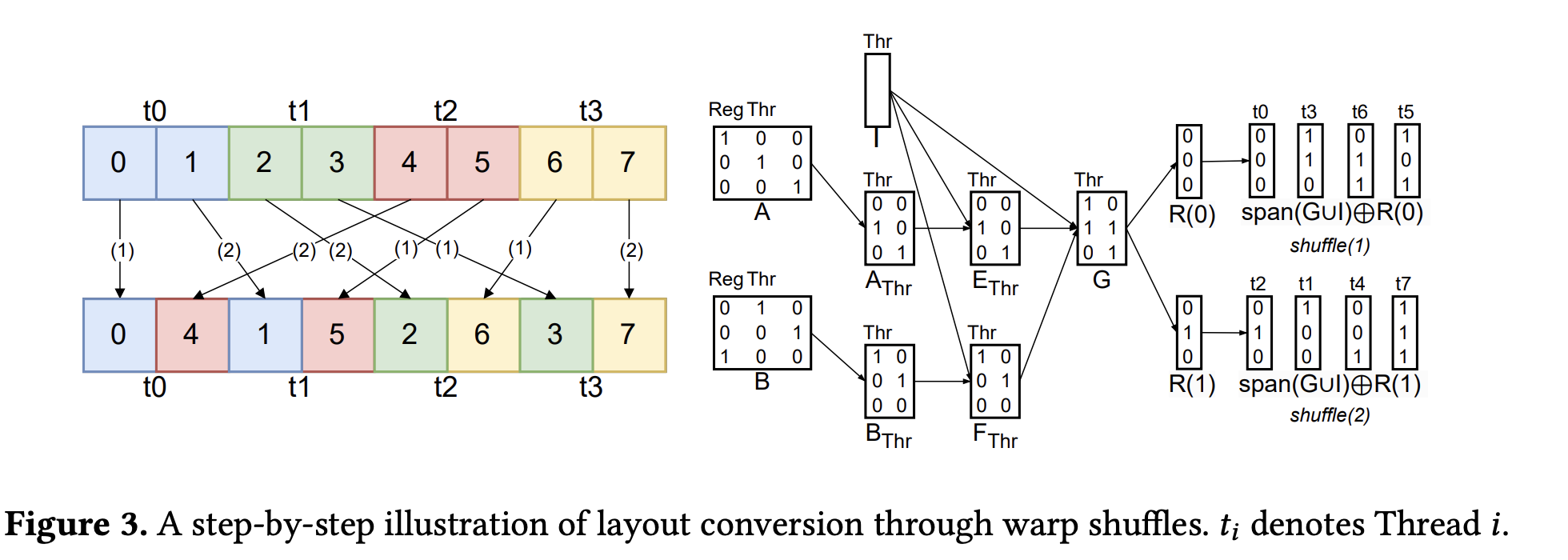

This process is illustrated by the example in Figure 3.

Figure 3: Layout conversion via warp shuffles from the paper. The labels

Figure 3: Layout conversion via warp shuffles from the paper. The labels t_i denote 3-bit logical address vectors. Note that the t0,..,t7 in the top right corner are not threads, they are just labels for the basis vectors.

1. Determine the Exchange Vector ($V$)

First, the compiler identifies data that can be moved as a single vector. This data corresponds to the intersection of the register-related column subspaces, $A_{reg} \cap B_{reg}$. Any data in this subspace maintains its relative position within the registers during the conversion.

Because this block of data is structurally invariant, it can be moved as a single unit. The compiler selects a basis $V$ for the largest possible subspace within this intersection whose total data size does not exceed the hardware’s 32-bit shfl.sync payload limit. This basis $V$ defines the vector that will be moved in each shuffle instruction.

2. Decompose the Address Space

The compiler creates a plan by decomposing the logical address space, which combines register and thread addresses. It computes bases for several subspaces.

- $I = \text{span}(A_{thr}) \cap \text{span}(B_{thr})$: Data that does not move between threads.

- $E = A_{thr} \setminus I$ and $F = B_{thr} \setminus I$: Subspaces of data to be sent and received.

- $G = {e_i \oplus f_i}$: The set of displacement vectors. The paper specifies that $G$ is constructed “After choosing an ordering for $E$ and $F$.” This implies a one-to-one pairing $(e_i, f_i)$ based on the chosen ordering of the basis vectors. This pairing is necessary because the conversion is a permutation: each source data element $e_i$ has a unique destination $f_i$.

- $R$: A basis for the intra-thread (register) address space. This is a basis for the complement of the thread space not already covered by the vectorized subspace $V$, $\text{span}(A_{thr} \cup B_{thr} \cup V)$.

3. Sequence the Exchange

A thread holds more data than one shuffle can move. The exchange is thus partitioned into rounds, one for each basis vector in $R$. A larger vectorization $V$ results in a smaller basis $R$ and fewer rounds.

In Figure 3, $V$ is empty, $R$ has two vectors, $R(0)$ and $R(1)$, corresponding to the two data elements held by each thread.

- Round 1 (